It’s data that makes the largest technology companies so valuable.

With Snowflake’s buzzy IPO this week, I want to dive deeper into why data matters, where the underlying value lies, and what companies are looking into to improve their ability to work with data.

In 2010, the number of large enterprises with a Chief Data Officer (CDO) was 15. By 2017, it was up to 4,000. In 2020, it’ll be over 10,000. Why? Data is revenue and revenue is sexy.

Every company is collecting more data than ever. Data is by definition connected, but duplication and dependencies create complexity. Demand for data access and analysis outside of product and engineering is growing, and so is the number of people asking for it. Each team now uses its own data systems and develops its own data products: analyses, dashboards, machine learning systems, even new product features. Companies are hiring analysts, adopting BI tools (e.g. Tableau, Looker, Mode), and moving to cloud warehouses (e.g. Snowflake, BigQuery, Redshift).

The number of storage and database solutions has exploded. Each is built for a purpose, but together they add complexity and demand more expertise. Data is expensive to store, transfer, and process (in time or $$), so companies are always looking to optimize it.

We are also seeing a more flexible delivery model: people want to control data themselves and prevent data breaches. With the increased adoption of open source, people want to see the code and have more control over their data.

The industry is working through changes in how data systems are built and managed:

Timeliness: From batch to real-time data pipelines and processing

Connectivity: From one-to-one bespoke integrations to many-to-many

Centralization: From centrally managed to self-serve tooling

Automation: From manually managed to automated tooling

Data management is increasingly critical to firms’ ability to win, serve, and retain their customers. During COVID, the value of data and having a single source of truth has been elevated even further. As a result, organizations are adopting new tools to support larger and more complex data platforms.

I talked to a number of leaders and practitioners (CTOs, Heads of Data & Analytics, and junior data scientists) about the biggest barriers to a successful data program and what they are looking into to improve their ability to work with data.

1. Data Pipelines

As businesses become more data-driven, coupled with the ever-increasing use of mission-specific SaaS solutions, data sources become more diverse and complex. Data teams don’t have the right tools to manage data in the warehouse efficiently. As a result, they have to spend most of their time building custom infrastructure and making sure their data pipelines work. A data pipeline allows you to consolidate data from multiple sources and makes it available for analysis and visualization. But data pipelines may carry incomplete and inaccurate data, preventing data-driven initiatives from delivering on their expected business outcomes.

New data pipeline tools use modern programming languages to create reusable abstractions for data processing, to monitor pipelines, and to visualize the flow of data as a DAG (directed acyclic graph). They enable data scientists to better manage large datasets stored across multiple repositories, operate data pipelines efficiently at scale, and proactively detect and resolve pipeline issues.
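To make the DAG idea concrete, here is a minimal sketch in plain Python. It is not how any of the tools mentioned in this post work internally; the task names, the sample records, and the `run_pipeline` helper are all made up for illustration. Each task declares which tasks it depends on, and the standard library's `graphlib.TopologicalSorter` (Python 3.9+) works out a valid execution order.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks. Each one receives a dict with the results of its upstream tasks.
def extract_orders(upstream):
    return [{"order_id": 1, "amount": 40.0}, {"order_id": 2, "amount": 60.0}]

def extract_customers(upstream):
    return [{"customer_id": 7, "order_id": 1}, {"customer_id": 8, "order_id": 2}]

def join_orders(upstream):
    # Attach the order amount to each customer record.
    amounts = {o["order_id"]: o["amount"] for o in upstream["extract_orders"]}
    return [
        {**c, "amount": amounts[c["order_id"]]}
        for c in upstream["extract_customers"]
    ]

def total_revenue(upstream):
    return sum(row["amount"] for row in upstream["join_orders"])

TASKS = {
    "extract_orders": extract_orders,
    "extract_customers": extract_customers,
    "join_orders": join_orders,
    "total_revenue": total_revenue,
}

# The DAG: task name -> set of tasks that must finish first.
DEPENDENCIES = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join_orders": {"extract_orders", "extract_customers"},
    "total_revenue": {"join_orders"},
}

def run_pipeline(tasks, dependencies):
    """Run every task after all of its dependencies; return the order and results."""
    order = list(TopologicalSorter(dependencies).static_order())
    results = {}
    for name in order:
        upstream = {dep: results[dep] for dep in dependencies[name]}
        results[name] = tasks[name](upstream)
    return order, results

order, results = run_pipeline(TASKS, DEPENDENCIES)
print(order)
print(results["total_revenue"])
```

Real orchestrators add scheduling, retries, monitoring, and a UI on top of this basic idea, but the dependency graph is the piece they all share.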

Innovators are: Dagster, Datameer, Datakin, Aiven, Airflow, Prefect, Ascend.io, Rivery, Mozart Data, Datalogue, Databand

2. Data Catalogs

Data discovery is a big challenge for many organizations. Even after BI adoption and the training of business users, many still rely heavily on the data team, asking questions like "Where can I find this data?" and "How do we calculate this metric?" None of the existing vendors truly provides the usage context and collaboration features needed to foster alignment between business and data teams.

Catalogs can solve data discovery problems and help you understand your data's relevance: what data you have, where it lives, how it’s structured, and how it’s being used. Modern catalogs surface this valuable information and add business context right in the flow of users’ analyses. They empower individuals and teams.
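As a rough illustration of what a catalog entry might hold, the sketch below models the four questions above (what, where, how it's structured, how it's used) as a small Python data structure with a naive search. The field names, the sample tables, and the ranking by recent query count are my own assumptions, not the schema of any product listed here.

```python
from dataclasses import dataclass

@dataclass
class CatalogEntry:
    name: str                 # what data you have
    location: str             # where it lives
    columns: dict             # how it's structured: column name -> type
    description: str = ""     # business context
    query_count_30d: int = 0  # how it's being used (hypothetical usage metric)

def search(catalog, term):
    """Return entries mentioning the term, most-used first."""
    term = term.lower()
    hits = [
        entry for entry in catalog
        if term in entry.name.lower()
        or term in entry.description.lower()
        or any(term in column.lower() for column in entry.columns)
    ]
    return sorted(hits, key=lambda entry: entry.query_count_30d, reverse=True)

# Made-up sample catalog.
catalog = [
    CatalogEntry("orders_legacy", "warehouse.archive.orders_v1",
                 {"id": "int", "revenue": "float"}, "Deprecated copy", 3),
    CatalogEntry("orders", "warehouse.sales.orders",
                 {"order_id": "int", "revenue": "float"}, "One row per order", 120),
    CatalogEntry("customers", "warehouse.crm.customers",
                 {"customer_id": "int"}, "One row per customer", 80),
]

for entry in search(catalog, "revenue"):
    print(entry.name, entry.location, entry.query_count_30d)
```

Ranking by usage is one simple way to point a business user at the table their colleagues actually trust, rather than the deprecated copy.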

Companies: Dataframe, Lyft’s Amundsen (Open Source), Select Star, Zaloni, Atlan, DataWorld

3. Data Collaboration

I am part of a community called Data Angels, a group of young women working in data and analytics. I asked the angels in which areas they’d like to try new tools, based on their biggest pain points, and the result is clear: data collaboration.

The role of data within organizations is shifting from simple, customized management reporting to that of a fundamental decision-making tool for all teams and functions. Everyone produces and consumes data, and that leaves room for so much collaboration.

Currently, the best business intelligence tools focus on creating insights but fall short when it comes to the debates and decisions that follow, which are fragmented across channels. Many vendors focused on making data analysis available and understandable to a broader swath of employees, like Snowflake, BigQuery, Looker, and Domo, try to add collaboration, but their products were not built for it. Only a few tools have true collaboration features built in, as opposed to simply sharing or exporting reports. Data collaboration features must improve both the speed and quality of insights.

A collaboration layer on top of a centralized BI hub has the potential to become the single source of truth. By allowing analysts to tag, recommend, and share data sets and patterns, and by organizing queries, reports, and work histories in one place, it democratizes access: people can leverage existing work, and data scientists are freed up to tackle new problems as a team.

Companies: Graphy*, Mode Analytics, Habr, Dataform, PopSQL, Dataiku

4. Data Quality

Data and analytics leaders are facing pressure to provide “trusted” data that can help business operations run more efficiently and help the business make decisions faster and with greater confidence. Data quality issues are often the result of database merges or changes in the source system. Bad data quality affects decision-making, business objectives, logistics, customer loyalty, brand reputation and a lot more.

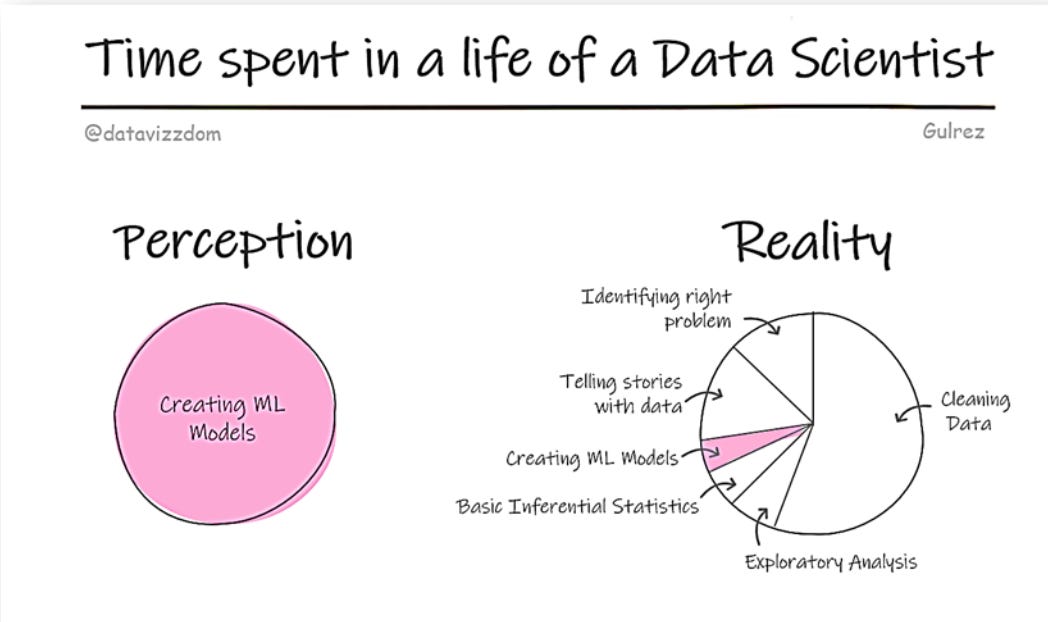

Data monitoring is still a blank spot and most organizations shockingly do not measure the quality of their data, so they do not know that they have a problem and where to improve it. The data platform or engineering team must manually identify the error and fix it. This is time-consuming, tedious work (taking up to 80% of data scientists’ time), and it’s the problem data scientists complain about most.

New tools help to identify and prioritize the data issues that are causing your business the most damage and walk you through a resolution workflow so that you can fix them before they hit production.
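For a feel of what "identify and prioritize" can mean in practice, here is a minimal sketch of two common checks (uniqueness of a key, missing values in required columns) with issues ranked by the number of affected rows. The function names, thresholds, and sample rows are assumptions for illustration only; the tools listed below go much further.

```python
from collections import Counter

def rows_with_duplicate_key(rows, key):
    """Count rows whose key value appears more than once."""
    counts = Counter(row.get(key) for row in rows)
    return sum(n for n in counts.values() if n > 1)

def rows_with_nulls(rows, column):
    """Count rows where the column is missing or None."""
    return sum(1 for row in rows if row.get(column) is None)

def run_checks(rows, key, required_columns, max_null_rate=0.0):
    """Return failed checks, sorted so the most damaging issue comes first."""
    issues = []
    duplicates = rows_with_duplicate_key(rows, key)
    if duplicates:
        issues.append({"check": f"unique:{key}", "affected_rows": duplicates})
    for column in required_columns:
        nulls = rows_with_nulls(rows, column)
        if rows and nulls / len(rows) > max_null_rate:
            issues.append({"check": f"not_null:{column}", "affected_rows": nulls})
    return sorted(issues, key=lambda issue: issue["affected_rows"], reverse=True)

# Made-up sample data with a duplicated id and some missing values.
rows = [
    {"id": 1, "email": "a@example.com", "country": "DE"},
    {"id": 2, "email": None, "country": "DE"},
    {"id": 2, "email": None, "country": None},
    {"id": 3, "email": "c@example.com", "country": "US"},
]

issues = run_checks(rows, key="id", required_columns=["email", "country"])
for issue in issues:
    print(issue)
```

Running checks like these on a schedule, and alerting on the result, is the difference between measuring data quality and finding out from an angry stakeholder.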

We also see that the role of the data product manager, who runs data quality checks and resolves problems before they do damage, is increasingly important. Companies need to establish data-quality-related roles that are critical to the success of data quality initiatives. Ownership for data needs to lie within the business unit and data quality should no longer be seen as a technical issue.

Companies: Soda Data, Toro Data, Monte Carlo Data, Datafold, Spectacles, Hubble

5. Data and Analytics Worlds Collide

Increasingly, analytics producers and consumers will be able to progress from data to insight to model to action in a single flow. Analytics and BI platforms are colliding with data science and machine learning. Data integration, data quality, data profiling, data cataloging and databases are colliding too.

Data engineers often work closely with data scientists, data analysts and ML engineers, so it’s important that they understand the tooling each of these roles uses. Furthermore, the omnipresent decentralization of data and analytics use cases leads to many part-time and hybrid roles across lines of business, including IT, which increases complexity. The trend toward continuous intelligence will create new responsibilities and roles for those managing data and analytics.

In a recent post, Eugene Yan, Applied Scientist at Amazon, argues that data scientists should strive to be more end-to-end instead of specializing in just one segment of the stack. This can provide more context, reduce communication and coordination overhead, and lead to faster iteration and greater ownership and accountability: more value, faster.

“It’s difficult to be effective when the data science process (problem framing, data engineering, ML, deployment/maintenance) is split across different people. It leads to coordination overhead, diffusion of responsibility, and lack of a big picture view. […] I believe data scientists can be more effective by being end-to-end.”

— Eugene Yan

Go-To-Market Motion and the growing impact of community

Priyanka Somrah 🦋 at Work-Bench recently dove into a number of data tools and their GTM motion. In short: “It used to be all about having a heavy direct sales team for data companies to go-to-market. Today, we see a variety of approaches including bottoms-up and open source usage.”

“Community engagement across open source and Slack channels is a key strategy for data tools to unlock user adoption and drive sales and marketing. The majority of new data companies that have an open-source offering maintain an active community Slack,” Priyanka finds.

Questions that buyers will ask when evaluating a data management solution:

What is your main area of focus and what does your solution not do? Data products sell into a crowded space. You fight not only direct competition but also adjacent products that go after the same budgets and attention. Given the sheer number of data tools that have emerged over the past few years, it’s become harder to figure out who does what. Buyers want to know where your solution fits in their modern data stack.

What kind of data do I need to analyze? Is the majority of the buyer’s data transactional? Is it all structured? If so, a traditional or “legacy” tool may be the best fit for their use case. They will take into account the types of data that run through their business and then match that up with the appropriate provider.

Cloud, on-prem, or both? Cloud isn’t just big, it’s also hybrid. Technical users are lured to the cloud because of its speed, scale, elasticity, and low level of maintenance, while business people are drawn to its agility, low cost, and ability to support new data-driven business practices. On-prem data management is certainly not dead, but a hybrid approach is increasingly common, even if cloud exposure is currently limited.

Which use cases do I need to focus on? What will the impact look like? What does the deployment of a data management tool allow them to do differently (e.g. improving data quality, lowering the cost of storage and management, delivering trusted data)? Focusing on specific use cases helps to ensure that the implementation of your tool moves the needle along the desired path. The impact should be measurable, but it will also require collaboration among users. The expansion of data volumes and velocities should always serve the end goal of expanded business value.

What level of data security do I need? Data protection capabilities can be expansive, and software buyers like to know up front whether securing their stored data is enough of a priority to justify what it costs.

Will data management help me maintain compliance? Companies, in particular those that are operating in a highly regulated industry, are looking for solutions that ensure certain data types remain compliant with ever-growing regulations and that they can automate that process.

I hope that this article has shed some light on the data hype and our modern data-tooling ecosystem. We are excited by all the innovation in this space and continue to track it for new investment opportunities. If you’re building something new, please reach out. 🙋‍♀️